Virtual assistants such as Siri, Alexa and Google Assistant are the stars of the companies that design mobile operating systems. However, all of these personal assistants are now said to be vulnerable to a sophisticated new attack that lets hackers send commands to your device without you hearing a thing.

Siri, Google Assistant, Alexa Said To Be Vulnerable To This New Attack

These assistants are built around the human voice, but an actual voice may not be strictly necessary. A trick borrowed from nature offers a way to subvert the concept of “voice” and attack devices that are designed to accept spoken commands.

The research comes from a team of Chinese scientists who drew inspiration from dolphins’ natural abilities to devise a kind of ultrasonic attack on smartphones, cars and virtual assistants.

Applications such as Apple’s Siri and Google Assistant are designed to listen for commands continuously and act on them right away, though the way those commands are entered is not exactly subtle. They are built around spoken input, and “shouting” a command at a smartphone is anything but elegant and discreet.

So researchers at Zhejiang University took a standard voice command, shifted it into the ultrasonic range (where humans cannot hear it) and tested whether devices would still react to it.

Dolphins inspire scientists

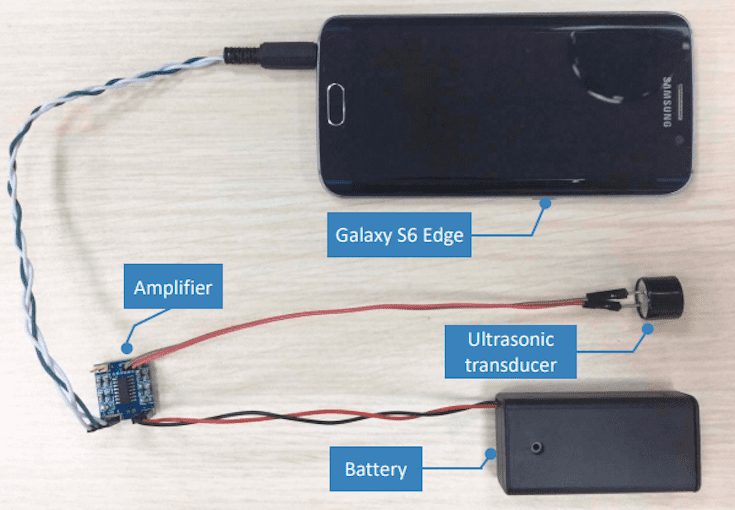

The method, dubbed DolphinAttack, takes advantage of the fact that human ears cannot pick up sounds above about 20 kHz. So the team added an amplifier, an ultrasonic transducer and a battery to an ordinary smartphone (the parts cost around $3 in total) and used it to send ultrasonic commands to voice-activated systems.

“By leveraging the non-linearity of the microphone circuits, the modulated low-frequency audio commands can be ‘demodulated,’ retrieved and, most important, interpreted by speech recognition systems,” said one of the researchers responsible for the experiment.
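To see why a non-linear microphone can "hear" an ultrasonic signal, here is a minimal sketch in Python. It is not the researchers' code. It assumes a single 1 kHz tone standing in for the voice command, a 30 kHz carrier, and a toy microphone model made of a linear term plus a small quadratic term. All constants and function names are illustrative assumptions.

```python
import cmath
import math

SAMPLE_RATE = 192_000   # Hz; assumed, high enough for the carrier and its second harmonic
DURATION = 0.1          # seconds; every tone below completes a whole number of cycles
CARRIER_HZ = 30_000     # assumed carrier, inside the 25-39 kHz band the article mentions
COMMAND_HZ = 1_000      # a single audible tone standing in for the voice command
DEPTH = 0.5             # assumed modulation depth
QUADRATIC_GAIN = 0.1    # assumed strength of the microphone's non-linear term


def am_modulate(n_samples):
    """Amplitude-modulate the stand-in command tone onto the ultrasonic carrier."""
    out = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE
        command = math.cos(2 * math.pi * COMMAND_HZ * t)
        carrier = math.cos(2 * math.pi * CARRIER_HZ * t)
        out.append((1 + DEPTH * command) * carrier)
    return out


def nonlinear_microphone(signal):
    """Toy microphone model (an assumption): linear term plus a small quadratic term."""
    return [x + QUADRATIC_GAIN * x * x for x in signal]


def tone_amplitude(signal, freq_hz):
    """Amplitude of one frequency component, measured with a single-bin DFT."""
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / SAMPLE_RATE)
        for n, x in enumerate(signal)
    )
    return 2 * abs(acc) / len(signal)


n_samples = round(SAMPLE_RATE * DURATION)
transmitted = am_modulate(n_samples)
captured = nonlinear_microphone(transmitted)

before = tone_amplitude(transmitted, COMMAND_HZ)
after = tone_amplitude(captured, COMMAND_HZ)
print(f"1 kHz content in the transmitted signal: {before:.6f}")
print(f"1 kHz content after the non-linearity:   {after:.6f}")
```

The transmitted signal only contains energy around 29 to 31 kHz, so the first figure is essentially zero. Squaring the signal mixes the carrier with its own sidebands, which drops a copy of the command tone back into the audible band with an amplitude of about `QUADRATIC_GAIN * DEPTH`. A real microphone would then low-pass filter the result, leaving only that recovered tone for the speech recognizer.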

The team validated DolphinAttack on popular speech recognition systems, including Siri, Google Assistant, Samsung S Voice, Huawei HiVoice, Cortana and Alexa. They also tested the concept in the automotive world and found that the navigation system in an Audi car was vulnerable to the same method.

How could this method be used in an attack?

Because voice control can do so many things, the team was able to ask an iPhone to call a specific number, which is a neat demonstration but not much of an attack on its own. However, they could also ask the device to visit a particular site that might contain malware. The method could even make a virtual assistant dim the screen to hide the attack, or simply take the device offline by switching it to airplane mode.

The biggest obstacle to the attack is not the voice command software itself but the audio capture capabilities of the device. Many smartphones now have multiple microphones, which makes an attack much more effective.

As for range, the greatest distance at which the team managed to carry out the attack was 1.7 meters, which is certainly practical. The signal is typically sent at a frequency between 25 and 39 kHz.
